AGI Hype vs AI Reality

Thoughts after an AI conference

If you were a casual observer of the current discourse around artificial intelligence, and all you consumed about the topic were articles by tech journalists and pronouncements from the most prominent AI startup CEOs, you could be forgiven for having the impression that everyone was fixated on a singular question: when will artificial general intelligence (AGI) arrive and utterly transform the world?

It is easy to get lost in the knock-on debates, such as the internecine struggle between the doomers who fear the arrival of a malevolent machine super-intelligence and the accelerationists who promise a dawning eschaton of unlimited economic growth. (The two factions share the assumption that AGI is both inevitable and imminent.)

There are plenty of reasons to be skeptical of AGI boosterism, such as the wall of diminishing returns we’re currently hitting on the supposed “law” of scaling, the slipperiness of definitions of AGI, and so on. But this is not a post about why the AGI hype may be incorrect.

No, this post is about the vast disconnect between the public discourse about artificial intelligence and the work of the thousands of programmers and scientists who are actually building, deploying, and innovating with artificial intelligence. For them, AI is not an abstract debate about a potential future but a function of present reality. And they generally could not care less about AGI.

I had the opportunity earlier this month to attend the thirty-ninth annual conference of the Association for the Advancement of Artificial Intelligence (AAAI; often said as “triple-A, I”). The simple fact that this conference has been going since the 1980s is itself a reminder that the heightened public discourse since the release of ChatGPT in 2022 is coming late in the game. Quite a few of the older attendees at the conference were clearly annoyed at how relative arrivistes to the industry were dominating the public conversation.

The scale of the proceedings was remarkable, especially the poster sessions. You see, at many disciplinary conferences the poster sessions act more or less like a holding pen for graduate students who don’t have the clout to get onto the more prestigious panels, which are dominated by full faculty and senior scholars.

But the poster exhibit hall at AAAI was the true beating heart of the conference. I was told that ~1900 posters would be presented in batches of several hundred at a time. The sessions were well attended, with people flocking around for explanations from the authors, faculty walking through to keep abreast of the latest research, and corporate headhunters recruiting for AI startups.

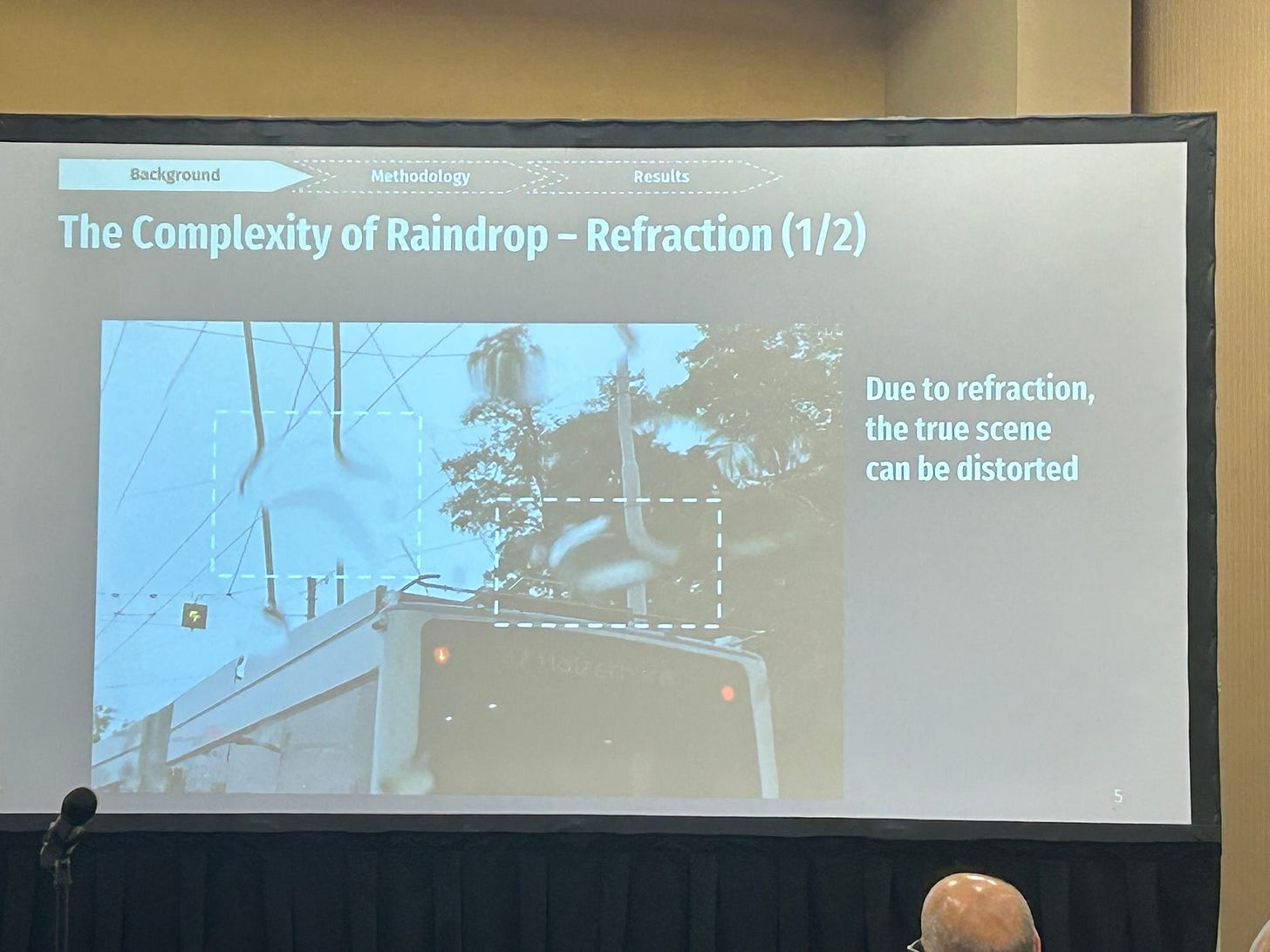

And while there were sections dedicated to AI ethics and papers about AGI, those were a relative sideshow. The overwhelming majority of papers were dedicated to practical applications of AI to existing problems. For example, there was a panel’s worth of papers just proposing different ways of using AI to “see” through raindrops that obscure the lenses of cameras for self-driving cars, methods that could be optimized depending on both the intensity and the size of the raindrops.

For these researchers, AI is simply a tool; or, more accurately, LLMs are only one particular tool in a much larger AI tool chest. From this perspective, AGI is merely a mildly interesting theoretical question. You don’t need AGI to see around raindrops, or to quickly assess the value of artworks for insurance companies, or to improve the efficiency and performance of resolution-enhancing tools for photographs. (These were also papers from the conference.) The future of AI is not one-size-fits-all AGI; it’s the deployment of a multitude of hyper-efficient, tailored AIs for boutique applications.

Moving beyond anecdote, the conference attendees resoundingly rejected some of the core assumptions baked into the AGI discourse. A survey of 475 attendees, which was just released in Nature, found that ~75% do not believe that further scaling of current AI systems, including LLMs, will ever get us to human-level reasoning. Fewer than a quarter believe that AGI should even be a core research goal. Thus, the median AI researcher disbelieves not only that AGI is imminent but also that it is important. There is a wide gulf between AGI-obsessed influencers (as well as capital-hungry startup CEOs) and actual AI researchers.

I take this as an encouraging sign. AI doesn’t need AGI to be able to improve the human condition in demonstrable ways. AI doesn’t need AGI to make smarter robots, safer cars, and a thousand other better devices and systems. And the people who are building those things aren’t just sitting around, twiddling their thumbs, and waiting for the AGI eschaton to arrive.